The LANGUAGE variable is broken for English as main language

by Leandro Lucarella on 2020- 11- 18 11:38 (updated on 2020- 11- 18 11:38)- with 0 comment(s)

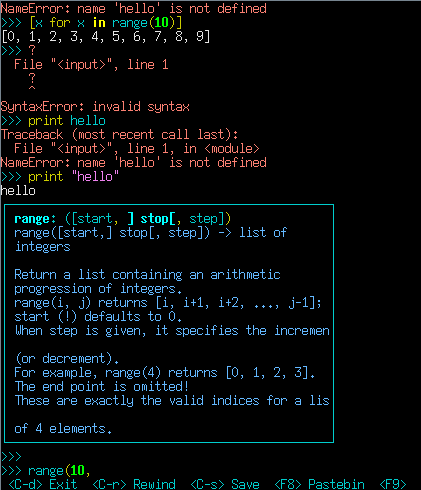

The LANGUAGE environment variable can accept multiple fallback languages (at least if your commands are using gettext), so if your main LANG is, say, es, but you also speak fr, then you can use LANGUAGE=es:fr.

But what happens when you main LANG is en, so for example your LANGUAGE looks like en:es:de? You'll notice some message that used to be in perfect English before using the multi-language fallback now seem to be shown randomly in es or de.

Well, it is not random. The thing is, since English tends to be the de-facto language for the original strings in a program, it looks like almost nobody provides an en translation, so when fallback is active, almost no programs will show messages in English.

For example, this is my Debian testing system with roughly 3.5K packages installed:

$ dpkg -l |wc -l 3522 $ ls /usr/share/locale/en/LC_MESSAGES/ | wc -l 12

Only 12 packages have a plain English locale. en_GB does a bit better:

$ ls /usr/share/locale/en_GB/LC_MESSAGES/ | wc -l 732

732 packages. This is still lower than both en and de:

$ ls /usr/share/locale/es/LC_MESSAGES/ | wc -l 821 $ ls /usr/share/locale/de/LC_MESSAGES/ | wc -l 820

The weird thing is packages as basic as psmisc (providing, for example, killall) and coreutils (providing, for example, ls) don't have an en locale, and psmisc doesn't provide es. This is why at some point it seemed like a random locale was being used. I had something like LANGUAGE=en_GB:en_US:en:es:de and I use KDE as my desktop environment. KDE seems to be correctly translated to en_GB, so I was seeing most of my desktop in English as expected, but when using killall, I got errors in German, and when using ls, I got errors in Spanish.

If you don't provide other fallback languages, gettext will automatically fall back to the C locale, which is the original strings embedded in the source code, which are usually in English, and this is why if you don't provide fallback languages (other than English at least), all will work in English as expected. Of course if you use C in your fallback languages, before any non-English language, then they will be ignored as the C locale should always be present, so that's not an option.

I find it very curious that this issue has almost zero visibility. At least my searches for the issue didn't throw any useful results. I had to figure it all out by myself like in the good old pre-stackoverflow times...

Note

I know is not a typical use case, as since almost all software use English for the C locale it hardly makes any sense to use fallback languages in practice if your main language is English. But theoretically it could happen, and providing an en translation is trivial.

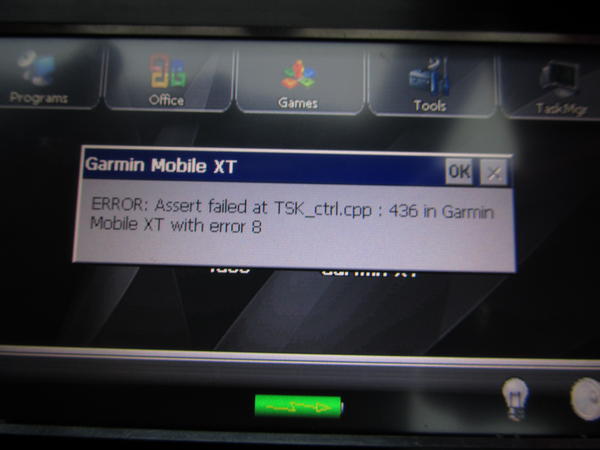

Elephone P9000

by Leandro Lucarella on 2020- 11- 18 11:10 (updated on 2020- 11- 18 11:10)- with 0 comment(s)

Note

This post is really old (May 2016), but was never published for some reason. I'm publishing it now just as an archeological artifact :)

I usually don't do reviews for anything, but I want to write a few points about this phone, in part for folks out there to know, but also as some sort of internal reminder of the things I've been finding.

I should say before anything else that I'm basically comparing this phone against my previous one, a Samsung Galaxy S4 (I9505) using CyanogenMod.

The Elephone P900 is a super tempting device. Here are the main reasons why I chosen this phone (in bold my hard requirements, in italics things I didn't really care about but it was a good opportunity to try out):

- It has 4GB of RAM and fast CPU (octacore)

- It supports memory expansion (micro SD up to 256GB 8-)

- It has a very high screen to body ratio (about 83%, for comparison the Nexus 5 has 71% and the iPhone 5s has 61%). I was looking for a 5 inch phone, so to go for a 5.5 inch one, the overall size of the phone had to be as small as possible.

- It has USB Type-C (I thought if I'm getting a new phone, better to have the new shiny no-non-sense connector)

- It comes with Android 6 (I want either that or to be supported by CM)

- Decent battery (3000mAh, which I expected to last for a full day of intensive use)

- It has at least one "navigation button" (I don't want to lose part of my screen with software buttons)

- It has a Sony camera sensor with f/2.0 and laser focus, which is supposed to be of decent quality really fast to do focus. That said, I read some reviews not speaking well about the camera

- It has a rouged back (my S4 was a bit slippery)

- It costs less than €250 (here in Germany)

- It has wireless charging and quick charging

- It has a fingerprint reader

- It has a quite high-res frontal camera (8 megapixel)

- It supports dual-SIM although the second SIM shares the slot with the memory expansion, so is not something I'll be able to use anyway

So, after using it for about a couple of days, these are my findings:

The good:

- The build quality is very nice, it really looks like a high end phone. More than my old S4 (which is made of plastic, while the P9000 is metallic).

- Is very light, even when it's a few grams extra compared to the S4, you can't really feel it. For offering 0.5 extra inches of screen is quite impressive.

- It is fast. I wasn't expecting to notice a difference with the S4 really, basically because I don't feel the S4 is slow. But you can tell the difference. The P9000 is snappier.

- It looks beautiful (at least for my minimalistic taste). I never care much about looks, but I really like this phone (much more than the S4).

- Despite the big screen and feeling a bit too big at first, the size seems manageable and the extra screen space is useful.

- Now having a fingerprint reader will probably be a requirement for my next phone. I encrypt my phone and use a longish password to unlock it. Being able to unlock it securely with just one touch is a huge gain.

- It can be rooted. It took me a while to find the right flasher for Linux (you need the latest version of it), but I could do it, and even TWRP is available for it already, which gives me some hope about better ROMs, and maybe even CM, appearing in the future.

The Bad:

- The fingerprint reader sucks. I've seen a video review complaining about it, and even for this guy complaining it worked much better than for me. I would say in my case it succeeds reading my fingerprint about less than 20% of the time. I even registered my fingerprint like five 5 times, using different finger positions and it still fails most of the time, and after 3 or 5 failures you have to wait 30 seconds before being able to retry.

- The screen is not bright enough for a sunny day. You can still see the screen, but it's not as bright as the one in my old S4.

- The camera pretty much sucks too. The f/2.0 I don't know where is it, pictures are always quite noisy. The auto-focus is not faster than my old S4. The sensor is supposed to be good, so I guess they just screwed it with the lenses. Or maybe is a firmware thing? But I doubt it.

- The sound really SUCKS. I never thought about it before. Even when I listen to music a lot, I never had a good year and never could pick up on bad quality recordings for example. Is a blessing. But with this phone I noticed. It sounds like crap (and I'm not talking about the speaker, which is understandable, I'm talking about plugging earphones). When I noticed I thought it might be the album. I tried another one, and another one, and finally I compared the same files in my old S4 and... Oh boy. This new phone's audio just SUCKS SO BAD. It's the phone. I would say this was the final deal breaker for me.

- The battery can't take an intense full day of usage. It's basically the same as my old S4 (and I want an improvement on this area). If I use it just for a few messages and most of the time inside with WiFi, it can last 2 full days. If I take it outside using mobile data, and listen to music during a hold day, it barely last for a day. If I add to that using maps and the GPS having the screen on more time, like when I'm traveling, it can barely last more than half a day.

- The Android version is missing some features that I thought it was pure Android (not CM), like the Ambient Display (shows notifications in a dimmed screen), the LiveDisplay (adjust the screen color temperature according to the time of the day) and the Do Not Disturb mode(s). The keyboard doesn't support swiping (major drawback for me).

- The touchscreen is not very sensitive. I can tell the difference with the S4. Maybe the one in the S4 is too sensitive, sometimes it reacts without even contacting the glass, but in the P9000 sometimes I feel I have to press the glass too much to get it reacting. Some gestures are harder to do because of this (like swipe-up to unlock).

- Only one navigation button. Even when is better than nothing, I found much more convenient having 3 navigation buttons like the S4 provides.

- No multicolor notification light. The navigation button on the bottom also serves as a notification light, but it has only one color and the frequency can't be configured either (AFAIK). The S4 has a multi-color led, which let you know what kind of notification is there before you even look at the screen.

- USB Type-C is not popular enough yet. Even when this is not the phone's fault, I realized we are not there yet. Micro USB cables are everywhere out there. You'll never miss one. With Type-C you better carry your cable everywhere or buy a bunch, because you are all alone now.

So, even when the external quality is amazing and, even when I never cared about looks, it looks extremely nice too, it looks like the low price tag has to come from somewhere, and that somewhere is the internal components, which seems like they are not the best.

Still the quality-price ratio is quite impressive IMHO, on paper you have the same specs as an iPhone 6, or Samsung S7, at less than half the price. But I think I prefer to spend an extra few bucks to get higher internal components qualities (specially with the sound), so I will probably return this phone and continue looking for one. Also I miss my CM too much. I think I will have to settle for an older phone that's is supported by CM.

TODO:

- Fingerprint unlock and screen gestures makes the phone never enter deep sleep, nice features but until it's fixed it might be better to disable them, at least when you know you'll need some juice.

- Fingerprint starts working better after some use (only 2 or 3 attempts are needed)

- WiFi consumes more battery than mobile data (WTF!?)

- RoDrIgUeZsTyLe™ MODPACK V1.1

- Sound is saved (probably is not amazing, but I don't notice the obvious creepiness anymore). Praise the Lord!

- Touchscreen is more sensitive, not as the S4 but definitely an appreciable improvement.

- Battery life improved a lot. One day of moderate activity (for me) and still about 65% battery left. I used the phone for 12 hours after fully charged, and my usage pattern was: about 6 hours outside (using mobile data), about 40 minutes of music playing + GPS working in high accuracy mode. The rest inside using WiFi. I also did some messaging and internet use, but nothing too intensive. BetterBatteryStats reports: 66% (~8h) deep sleep (which is still low, I wonder what this phone could last if it were more aggressive about going to deep sleep), 8% (~1h) screen on. Wifi running 100% of the time (~12h). Battery consumption average was 3%/h. My guess is that with a similar usage, the S4 I would probably ended the day with not more than 30% or 40% battery.

- Ways to improve the battery life and fix other stuff: Xposed framework, but not supported yet. For example with "Amplify Battery Extender" I could disable the wakelock for the fingerprint or NlpWakelock. Wakelock Terminator might also help (also needs Xposed)

- For now using DisableService to disable NlpService from MTK NLP Service, the location seems to keep working fine. Another option is to put the GPS in device-only mode to avoid the NPL service from running.

- Install Xposed using Eragon 2.0 ROM:

- Install Xposed Material installer (3.0 alpha4) http://forum.xda-developers.com/xposed/material-design-xposed-installer-t3137758

- Edit /etc/init.d/07permissive and comment out the sleep 60

- Install Xposed framework v82 (not a newer one otherwise settings will force close)

- Battery life saved via update from 2016-05-31. Fingerprint reader and WiFi don't keep the phone awake anymore (5~10% awake when screen is off with both enabled).

Simplicity

by Leandro Lucarella on 2016- 04- 01 22:58 (updated on 2016- 04- 01 22:58)- with 0 comment(s)

This is mostly an article I want to save for myself about simplicity. It was originally written by Mark Ramm in the context of a Python web framework I used (TurboGears). The original article seems to be gone, but you can still find it in the Archive.org's Wayback Machine.

Here is a transcription:

What is Simplicity?(May 31st, 2006 by Mark Ramm)Simplicity is knowing when one more rock would be too many, and one less rock would be too few. But it’s not just knowing the right number of rocks, it’s also knowing which rocks are right, and how to arrange them.

As Brad reminds us, simplicity is not achieved merely by making something easier, or less complex.

Take away all the complexity, all the difficulty, and all of the details from anything and what you are left with is not simple: it’s just boring.

On the other hand, Simplicity embraces exactly the right details, the right difficulties, the right complexity, but because everything is tied together in the right way, you are left with a sense of clarity, and a sense that everything belongs exactly where it is. Simplicity is achieved when everything means something.

In other words, simplicity is defined by what you add — clarity, purpose, and intentionality — not by what you remove.

For those of us who write software, simplicity is not a simple thing to learn. Writing the TurboGears book and working with the amazing group of people who contribute to the project has been a learning experience for me. Everybody is focused on making the web development simpler — and it’s amazing how much experience and depth of understanding is necessary to create a simple interface. It’s easy to build an interface that solves 80% of the problem, or an interface that solves 200% of the problem, but it is hard to solve just the right problem, and to do it in a clean, clear, way.

Of course, every project has warts, and TurboGears re-uses other projects which also have warts. So there’s no way I can say that TurboGears has arrived. But the will is there, and the journey sure has been productive for me.

Incredible Machine - Hurricane Heart Attacks

by Leandro Lucarella on 2015- 08- 25 08:12 (updated on 2015- 08- 25 08:12)- with 0 comment(s)

The Black Keys - Turn incompressible

by Leandro Lucarella on 2014- 05- 06 22:33 (updated on 2014- 05- 06 22:33)- with 0 comment(s)

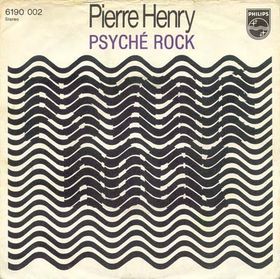

Maybe you heard about the new album from The Black Keys. Maybe you didn't. In any case, I don't want to talk about the album (which is good BTW), I want to talk about the album cover:

See how bad it looks? Now click on the image and see how good it looks (in terms of quality, the album cover is pretty ugly anyway :P). The thing is, this stupid pattern is very hard to compress, so even using a JPG quality of 90%, you get a quite big file size and a pretty crappy image quality (126KB for a 500x500 image is quite a lot, 294KB for PNG using compression 9). If you look at the big image, even the colors are different, so the image makes resizing algorithms also go nuts, the image looks darker (or is this just an ilusion because of the changed relationship between both colors?).

Try it yourself, download the image, resize it, save it with different formats and qualities.

Coincidence? I guess not.

The Day We Fight Back

by Leandro Lucarella on 2014- 02- 10 18:59 (updated on 2014- 02- 10 18:59)- with 0 comment(s)

On Anniversary of Aaron Swartz's Tragic Passing, Leading Internet Groups and Online Platforms Announce Day of Activism Against NSA Surveillance.

Participants including Access, Demand Progress, the Electronic Frontier Foundation, Fight for the Future, Free Press, BoingBoing, Reddit, Mozilla, ThoughtWorks, and more to come, will join potentially millions of Internet users to pressure lawmakers to end mass surveillance -- of both Americans and the citizens of the whole world.

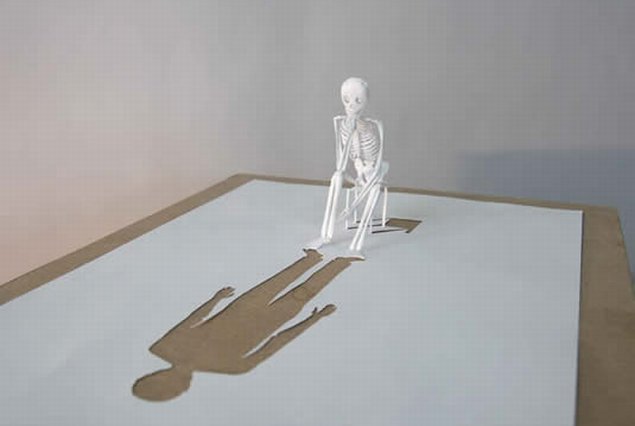

Oscar

by Leandro Lucarella on 2013- 12- 17 20:26 (updated on 2013- 12- 17 20:26)- with 0 comment(s)

First Flattr

by Leandro Lucarella on 2013- 11- 16 00:24 (updated on 2013- 11- 16 00:24)- with 0 comment(s)

9 months ago I decided to try Flattr. I created an account, put some money on it, started flattring and made myself flattrable. But nothing happened. Also sometimes you don't know if the people you are flattring will even reclaim your flattrs (in services that automatically provides flattr links).

Conclusion, I got quite disappointed. But today I see the light again, as I received my first and only flattr (for eventxx). Thanks whoever you are, anonymous hero, you brought hope again to humanity :P

Anyway, I'll try to give it a shot again, and try to keep the wheel moving.

You should do that too.

Radiohead Nude via zx80+printer+scanner+hdd

by Leandro Lucarella on 2013- 04- 13 20:53 (updated on 2013- 04- 13 20:53)- with 0 comment(s)

Fucking awesome, be patient for the first minute...

The Money Myth

by Leandro Lucarella on 2013- 02- 21 21:13 (updated on 2013- 02- 21 21:13)- with 0 comment(s)

Flattr

by Leandro Lucarella on 2013- 02- 17 21:02 (updated on 2013- 02- 17 21:02)- with 0 comment(s)

I learned that Flattr, a social micropayment service that I've been overlooking for a long time, was created by some of the founders of The Pirate Bay after watching TPB AFK.

I'm trying to donate (or pay) more and more to people using alternative means to produce stuff, like artists using CC licenses or software developers working with free licenses (I already bought a copy of the movie :). I feel like I have to get more involved to keep the wheel spinning and help people keep doing stuff, cutting the intermediaries as much as possible.

I don't know why I had some resistance to get into Flattr, maybe is because Facebook made me hate anything that have a thumbs up, or a +1 or counter, but knowing the history behind it a little better encouraged me to finally get an account and start using Flattr. And is really nice. Is much easier than going through Paypal each time a want to give some bucks to someone, and allows you to even make very small donations.

I recommend to see this introductory video:

I also decided to flattr-ize all my website, each project individually and even this blog. Not exactly for economical reasons (I think very few people know about anything I do so I don't really expect to earn any money from this), but as another way to spread the word. Also, I'm really curious about what I just said, I really wonder if there is someone out there grateful enough to make even a micro-donation to anything I do or did :)

Anyway, I would like to recommend to do the same, if you do something great, add a Flattr button to what you do, and if you like something out there and it has a Flattr, click it. Let's see if it helps to keep the wheel spinning :)

TPB AFK

by Leandro Lucarella on 2013- 01- 13 20:01 (updated on 2013- 01- 13 20:01)- with 0 comment(s)

Toshiba Satellite/Portege Z830/R830 frequency lock (and BIOS upgrade)

by Leandro Lucarella on 2012- 11- 28 23:21 (updated on 2012- 11- 28 23:21)- with 0 comment(s)

Fuck! I bought this extremely nice ultrabook, the Toshiba Satellite Z830-10J, about an year ago, and I've been experiencing some problems with CPU frequency scaling.

At one point I looked and looked for kernel bugs without much success. I went through several kernel updates in the hope of this being fixed, but never happened.

It seemed that the problem wasn't so bad after all, because I only got the CPU frequency locked down to the minimum when using the ondemand scaling governor, but the conservative was working apparently OK.

Just a little more latency I thought, is not that bad.

Recently I received an update on a related bug and I thought about giving it another shot. This mentioned something about booting with processor.ignore_ppc=1 to ignore some BIOS warning about temperature to overcome some faulty BIOS, so I thought on trying that.

But before doing, if this were a real BIOS problem, I thought about looking for some BIOS update. And there was one. The European Toshiba website offered only a Windows program to do the update though, but fortunately I found in a forum a suggestion about using the non-European BIOS upgrade instead, which was provided also as an ISO image. The problem is I don't have a CD-ROM, but that shouldn't stop me, I still have USB sticks and hard-drives, how hard could it be? I failed with UNetbootin but quickly found a nice article explaining how to boot an ISO image directly with grub.

BIOS upgraded, problem not fixed. So I was a about to try the kernel parameter when I remembered I saw some other article when googling desperately for answers suggesting changing some BIOS options to fix a similar problem.

So I though about messing with the BIOS first instead. The first option I saw that looked a little suspicious was in:

PowerManagement

-> BIOS Power Management

-> Battery Save Mode (using custom settings)

-> Processor Speed

<Low>

That is supposed to be only for non-ACPI capable OS, so I thought it shouldn't be a problem, but I tried with <High> instead.

WOW!!!

I start noticing the notebook booting much faster, but I thought maybe it was all in my mind...

But no, then my session opened way faster too, and everything was extremely faster. I think maybe about twice as fast. Everything feels a lot more responsive too. I can't believe I spend almost an year with this performance penalty. FUCKING FAULTY BIOS. I didn't make any battery life comparisons yet, but my guess is everything will go well, because it should still consume very little power when idle.

Anyway, lesson learned:

Less blaming to the kernel, more blaming to the hardware manufacturers.

But I still want to clarify that I love this notebook. I found it a perfect combination between features, weight and battery life, and now that it runs twice as fast (at least in my brain), is even better.

Hope this is useful for someone.

SecurityKiss + Dante == bye bye censorship

by Leandro Lucarella on 2012- 11- 25 20:00 (updated on 2012- 11- 28 21:28)- with 3 comment(s)

I live in Germany, and unfortunately there is something in Germany called GEMA, which basically censor any content that "might have copyrighted music".

Among the sites being censored are Grooveshark (completely banned) and YouTube (only banned if the video might have copyrighted music according to some algorithm made by Google). Basically this is because GEMA want these company to pay royalties for each time some copyrighted song get streamed). AFAIK Grooveshark don't want to pay at all, and Google want to pay a fixed fee (which is what it does in every other country), because it makes no sense to do otherwise, since anyone can just endlessly get a song streamed over and over again just to be paid.

Even when the model is debatable, there is a key aspect and why I call this censorship: not all the banned content is copyrighted or protected by GEMA.

- In Grooveshark there are plenty of bands that release their music using more friendly license, like CC.

- There are official videos posted in YouTube by the bands themselves and embedded in the band official website that is banned by GEMA.

- There are videos in YouTube that doesn't have copyrighted music at all, but they have to cover their asses and ban everything suspicious just in case.

- The personal videos that do have copyrighted music get banned completely, not only muted.

These are just the examples that pop on my mind now.

There are plenty of ways to bypass this censorship and they are the only way to access legal content in Germany that gets unfairly banned, not only harming the consumers, but also the artists, because most of the time having their music exposed in YouTube only spreads the word and do more good than harm.

HideMyAss is a popular choice for a web proxy. But I like SecurityKiss, a free VPN (up to 300MB per day), because it gives a more comprehensive solution.

But here comes the technical chalenge! :) I don't want to route all my traffic through the VPN, or to have to turn the VPN on and off again each time I want to see the censored content. What I want is to see some websites through the VPN. A challenge that proved to be harder than I initially thought and why I'm posting it here.

So the final setup I got working is:

- OpenVPN to use SecurityKiss free VPN service.

- Dante Socks Server to route any application capable of using a socks server through the VPN.

- FoxyProxy Firefox extension to selectively route YouTube and Grooveshark through the Socks proxy.

And here is how I did it (in Ubuntu 12.10):

OpenVPN server

Install the package:

sudo apt-get install openvpn

Get the configuration bundle generated for you account in the control panel and then create a /etc/openvpn/sk.conf file with this content:

client dev tunsk proto udp # VPN server IP : PORT # (pick the server you want from the README file in the bundle) remote 178.238.142.243 123 nobind ca /etc/ssl/private/openvpn-sk/ca.crt cert /etc/ssl/private/openvpn-sk/client.crt key /etc/ssl/private/openvpn-sk/client.key comp-lzo yes persist-key persist-tun user openvpn group nogroup auth-nocache script-security 2 route-noexec route-up "/etc/openvpn/sk.setup.sh up" down "/usr/bin/sudo /etc/openvpn/sk.setup.sh down"

Install the certificate and key files from the bundle in /etc/ssl/private/openvpn-sk/ with the names specified in the sk.conf file.

Create the tun device:

mknod /dev/net/tunsk c 10 200

Start the VPN at system start (optional):

echo 'AUTOSTART="sk"' >> /etc/default/openvpn

Add the openvpn system user:

adduser --system --home /etc/openvpn openvpn

Now we need to route some specific traffic only through the VPN. I choose to discriminate traffic by the uid/gid of the application that generated it. So with the route-up and down script we will do all the special routing. I also want my default route table to be untouched, that's why I used route-noexec. Here is how the /etc/openvpn/sk.setup.sh script looks for me:

#!/bin/sh

# Based on:

# http://serverfault.com/questions/345111/iptables-target-to-route-packet-to-specific-interface

#exec >> /tmp/log

#exec 2>> /tmp/log.err

#set -x

# Config

uid=skvpn

gid=skvpn

mark=100

table=$mark

priv_dev=br-priv

env_file="/var/run/openvpn.sk.env"

umark_rule="OUTPUT -t mangle -m owner --uid-owner $uid -j MARK --set-mark $mark"

gmark_rule="OUTPUT -t mangle -m owner --gid-owner $gid -j MARK --set-mark $mark"

masq_rule="POSTROUTING -t nat -o $dev -j SNAT --to-source $ifconfig_local"

up()

{

# Save environment

env > $env_file

# Route all traffic marked with $mark through route table $table

ip rule add fwmark $mark table $table

# Make all traffic go through the VPN gateway in route table $table

ip route add table $table default via $route_vpn_gateway dev $dev

# Except for the internal traffic

ip route | grep "dev $priv_dev" | \

xargs -n1 -d'\n' echo ip route add table $table | sh

# Flush route tables cache

ip route flush cache

# Mark packets originated by processes with owner $uid/$gid with $mark

iptables -A $umark_rule

iptables -A $gmark_rule

# Prevent the packets sent over $dev getting the LAN addr as source IP

iptables -A $masq_rule

# Relax the reverse path source validation

sysctl -w "net.ipv4.conf.$dev.rp_filter=2"

}

down()

{

# Restore and remove environment

. $env_file

rm $env_file

# Since the device is already removed, there is no need to clean

# anything that was referencing the device because it was already

# removed by the kernel.

# Delete iptable rules

iptables -D $umark_rule

iptables -D $gmark_rule

# Delete route table and rules for lookup

ip rule del fwmark $mark table $table

# Flush route tables cache

ip route flush cache

}

if test "$1" = "up"

then

up

elif test "$1" = "down"

then

down

else

echo "Usage: $0 (up|down)" >&2

exit 1

fi

I hope this is clear enough. Finally we need to add the skvpn user/group (for which all the traffic will be routed via the VPN) and let the openvpn user run the setup script:

sudo adduser --system --home /etc/openvpn --group skvpn sudo visudo

In the editor, add this line:

openvpn ALL=(ALL:ALL) NOPASSWD: /etc/openvpn/sk.setup.sh

Now if you do:

sudo service openvpn start

You should get a working VPN that is only used for processes that runs using the user/group skvpn. You can try it with:

sudo -u skvpn wget -qO- http://www.securitykiss.com | grep YOUR.IP

Besides some HTML, you should see the VPN IP there instead of your own (you can check your own by running the same without sudo -u skvpn).

Dante Socks Server

This should be pretty easy to configure, if it weren't for Ubuntu coming with an ancient (1.x when there is a 1.4 beta already) BROKEN package. So to make it work you have to compile it yourself. The easiest way is to get a sightly more updated package from Debian experimental. Here is the quick recipe to build the package, if you want to learn more about the details behind this, there is always Google:

cd /tmp for suffix in .orig.tar.gz -3.dsc -3.debian.tar.bz2 do wget http://ftp.de.debian.org/debian/pool/main/d/dante/dante_1.2.2+dfsg$suffix done sudo apt-get build-dep dante-server dpkg-source -x dante_1.2.2+dfsg-3.dsc cd dante_1.2.2+dfsg dpkg-buildpackage -rfakeroot cd .. dpkg -i /tmp/dante-server_1.2.2+dfsg-3_amd64.deb

Now you can configure Dante, this is my configuration file as an example, it just allow unauthenticated access to all clients in the private network:

logoutput: syslog

internal: 10.1.1.1 port = 1080

external: tunsk

clientmethod: none

method: none

user.privileged: skvpn

user.unprivileged: skvpn

user.libwrap: skvpn

client pass {

from: 10.1.1.0/24 port 1-65535 to: 0.0.0.0/0

log: error # connect disconnect

}

client pass {

from: 10.1.1.0/24 port 1-65535 to: 0.0.0.0/0

}

#generic pass statement - bind/outgoing traffic

pass {

from: 0.0.0.0/0 to: 0.0.0.0/0

command: bind connect udpassociate

log: error # connect disconnect iooperation

}

#generic pass statement for incoming connections/packets

pass {

from: 0.0.0.0/0 to: 0.0.0.0/0

command: bindreply udpreply

log: error # connect disconnect iooperation

}

I hope you get the idea...

Now just start dante:

sudo service danted start

And now you have a socks proxy that output all his traffic through the VPN while any other network traffic goes through the normal channels!

FoxyProxy Addon

Setting up FoxyProxy should be trivial at this point (just create a new proxy server pointing to dante and set it as SOCKS v5), but just as a pointer, here are some example rules (set them as Whitelist and Regular Expression):

^https?://(.*\.)?youtube\.com/.*$ ^https?://(.*\.)?grooveshark\.com/.*$

PulseAudio flat volumes

by Leandro Lucarella on 2012- 11- 06 20:57 (updated on 2012- 11- 06 20:57)- with 0 comment(s)

Just a quick note because it took me ages to find out how to do it.

I don't really use the feature of pulseaudio that gives every application their own volume instead of manipulating the master volume directly, and lately it became more and more a problem, as I want to use applications like mpd or xbmc that allow remote controlling, and for that having separate volumes makes no sense.

I managed to fix it in mpd once, by using a mixed setup, using pulse as output, but the hardware alsa mixer, but for xbmc I couldn't find a way.

So that made me think if I really wanted the split volumes thingy, and the answer was no. After looking for hours for how to do it, the answer is pretty trivial. Just edit /etc/pulse/daemon.conf and change the flat-volumes option to yes.

You are welcome.

George Carlin on religion and God

by Leandro Lucarella on 2012- 10- 19 13:10 (updated on 2012- 10- 19 13:10)- with 0 comment(s)

A little old, but always fun!

F.A.T.

by Leandro Lucarella on 2012- 10- 15 10:44 (updated on 2012- 10- 15 22:40)- with 0 comment(s)

Reminds me a little of The Yes Men.

Update

You might want to take a look at the other videos from the PBS Off Book series if you liked this one.

Declassified U.S. Nuclear Test Film #55

by Leandro Lucarella on 2012- 10- 09 10:11 (updated on 2012- 10- 09 13:11)- with 0 comment(s)

Update

I'm sorry, youtube related feature is too dangerous :P. This is a timeline of all the nuclear bombs dropped between 1945 and 1998. It starts slow, but don't worry, it will speed up to reach the 2000+ bombs dropped in that period, more than 50% by USA. Is funny they use "might have weapons of mass destruction" as an excuse to invade countries when they are by far the country that dropeed the most nuclear bombs. Maybe they dropped them all and now they have none :P

Stuart Murdoch stake on Spotify and music streaming companies

by Leandro Lucarella on 2012- 10- 04 22:45 (updated on 2012- 10- 04 22:45)- with 0 comment(s)

OK, first of all, this is pretty old (more than one year) but I just bumped into it and it seems interesting enough for me to post it.

Belle & Sebastian's singer, Stuart Murdoch, have posted his mind about Spotify and music streaming services, which apparently are ripping off artists way worse even than traditional record companies (see graph below).

I'll transcribe the part of the post found most interesting (well, it's actually almost the whole post) for convenience (bold added by me), but you can read the whole post for unbiased and complete information :)

[...] Ok, now my point, and probably my only important point: I’m certainly not against ‘the generation who no longer pay for music.’ That horse has bolted. And hey, I like that horse! It’s free and young and happy and doing its horsey thing.

What has had me conflicted is Spotify itself. Overnight, this thing appeared called Spotify, claiming it was a great idea, innovative, the saviour of the industry. From what I can gather, and no one has been able to tell me differently, it’s financed by a gathering of the top (ie. richest) people, from the top (ie. richest) record labels.

Overnight, the whole Belle And Sebastian back catalogue became available to stream, for anybody, for free, for good. We weren’t asked about it.

“How were you not asked?” I can imagine you would say. That’s exactly what I asked the record label. Their answer was not that informative. They mumbled something about a distribution company, that was under some umbrella; that it wasn’t up to them.

Can I just stress that Rough Trade is certainly not one of the aforementioned ‘richest’ record companies. I feel a bit bad for them. I’m gathering that they thought they had nothing to lose with the Spotify thing, that they had to try something new. (Kids, if there’s a less viable career choice than ‘independent recording artist’ at the minute, I would certainly say it was ‘independent record label.’)

Anyway, that’s enough of the angst. I’ve said it to the rest of the band, and I’ll say it again, “just because we’re in a band, it doesn’t make it a bloody pension plan”. We’ve had, and continue to have, a brilliant time making music and playing music and dreaming, and just about getting away with it. If it just got harder, then that’s because it should be hard. I think in the end it will make the music, the art, better.

I’m not even so much against Spotify. If they can get their model right, ie pay the bands something approaching appropriate amounts, then it will be all ok. I’m ready to throw my lot in with them; I mean, I use it now. And if I was 19 I would have used it too. (Would have used it to decide which vinyl/music to buy/see, as I’m sure lots of people still do)

It just seemed rich of them that they decided to charge everyone. They lured everyone in with ‘Our’ music (the royal ‘Our’), which they didn’t pay for, and now, probably because a shareholder somewhere is sitting in a Porsche, crying for a dividend, they’re going to charge money in our name. And I will eat my beloved black hat if we ever see a share. [...]

Another very interesting bit of information is the one provided in one of the comments, a nice graph about how much money artists get according to the distribution method, which I will also put here for convenience (I hope the author don't mind).

It would be nice to see an update including more open-license friendly services like Bandcamp, Magnatunes or Jamendo.

The hipsterest garage sale ever

by Leandro Lucarella on 2012- 10- 02 18:37 (updated on 2012- 10- 02 18:37)- with 0 comment(s)

Firefox advertisment in Berlin's public transport

by Leandro Lucarella on 2012- 10- 01 17:14 (updated on 2012- 10- 01 23:01)- with 0 comment(s)

Translation of e-mails using Mutt

by Leandro Lucarella on 2012- 09- 24 12:45 (updated on 2012- 10- 02 12:58)- with 0 comment(s)

Update

New translation script here, see the bottom of the post for a description of the changes.

I don't like to trust my important data to big companies like Google. That's why even when I have a GMail, I don't use it as my main account. I'm also a little old fashion for some things, and I like to use Mutt to check my e-mail.

But GMail have a very useful feature, at least it became very useful since I moved to a country which language I don't understand very well yet, that's not available in Mutt: translation.

But that's the good thing about free software and console programs, they are usually easy to hack to get whatever you're missing, so that's what I did.

The immediate solution in my mind was: download some program that uses Google Translate to translate stuff, and pipe messages through it using a macro. Simple, right? No. At least I couldn't find any script to do the translation, because Google Translate API is now paid.

So I tried to look for alternatives, first for some translation program that worked locally, but at least in Ubuntu's repositories I couldn't find anything. Then for online services alternatives, but nothing particularly useful either. So I finally found a guy that, doing some Firebuging, found how to use the free Google translate service. Using that example, I put together a 100 SLOC nice general Python script that you can use to translate stuff, piping them through it. Here is a trivial demonstration of the script (gt, short for Google Translate... Brilliant!):

$ echo hola mundo | gt hello world $ echo hallo Welt | gt --to fr Bonjour tout le monde

And here is the output of gt --help to get a better impression on the script's capabilities:

usage: gt [-h] [--from LANG] [--to LANG] [--input-file FILE]

[--output-file FILE] [--input-encoding ENC] [--output-encoding ENC]

Translate text using Google Translate.

optional arguments:

-h, --help show this help message and exit

--from LANG, -f LANG Translate from LANG language (e.g. en, de, es,

default: auto)

--to LANG, -t LANG Translate to LANG language (e.g. en, de, es, default:

en)

--input-file FILE, -i FILE

Get text to translate from FILE instead of stdin

--output-file FILE, -o FILE

Output translated text to FILE instead of stdout

--input-encoding ENC, -I ENC

Use ENC caracter encoding to read the input (default:

get from locale)

--output-encoding ENC, -O ENC

Use ENC caracter encoding to write the output

(default: get from locale)

You can download the script here, but be warned, I only tested it with Python 3.2. It's almost certain that it won't work with Python < 3.0, and there is a chance it won't work with Python 3.1 either. Please report success or failure, and patches to make it work with older Python versions are always welcome.

Ideally you shouldn't abuse Google's service through this script, if you need to translate massive texts every 50ms just pay for the service. For me it doesn't make any sense to do so, because I'm not using the service differently, when I didn't have the script I just copy&pasted the text to translate to the web. Another drawback of using the script is I couldn't find any way to make it work using HTTPS, so you shouldn't translate sensitive data (you shouldn't do so using the web either, because AFAIK it travels as plain text too).

Anyway, the final step was just to connect Mutt with the script. The solution I found is not ideal, but works most of the time. Just add these macros to your muttrc:

macro index,pager <Esc>t "v/plain\n|gt|less\n" "Translate the first plain text part to English" macro attach <Esc>t "|gt|less\n" "Translate to English"

Now using Esc t in the index or pager view, you'll see the first plain text part of the message translated from an auto-detected language to English in the default encoding. In the attachments view, Esc t will pipe the current part instead. One thing I don't know how to do (or if it's even possible) is to get the encoding of the part being piped to let gt know. For now I have to make the pipe manually for parts that are not in UTF-8 to call gt with the right encoding options. The results are piped through less for convenience. Of course you can write your own macros to translate to another language other than English or use a different default encoding. For example, to translate to Spanish using ISO-8859-1 encoding, just replace the macro with this one:

macro index,pager <Esc>t "v/plain\n|gt -tes -Iiso-8859-1|less\n" "Translate the first plain text part to Spanish"

Well, that's it! I hope is as useful to you as is being to me ;-)

Update

Since picking the right encoding for the e-mail started to be a real PITA, I decided to improve the script to auto-detect the encoding, or to be more specific, to try several popular encodings.

So, here is the help message for the new version of the script:

usage: gt [-h] [--from LANG] [--to LANG] [--input-file FILE]

[--output-file FILE] [--input-encoding ENC] [--output-encoding ENC]

Translate text using Google Translate.

optional arguments:

-h, --help show this help message and exit

--from LANG, -f LANG Translate from LANG language (e.g. en, de, es,

default: auto)

--to LANG, -t LANG Translate to LANG language (e.g. en, de, es, default:

en)

--input-file FILE, -i FILE

Get text to translate from FILE instead of stdin

--output-file FILE, -o FILE

Output translated text to FILE instead of stdout

--input-encoding ENC, -I ENC

Use ENC caracter encoding to read the input, can be a

comma separated list of encodings to try, LOCALE being

a special value for the user's locale-specified

preferred encoding (default: LOCALE,utf-8,iso-8859-15)

--output-encoding ENC, -O ENC

Use ENC caracter encoding to write the output

(default: LOCALE)

So now by default your locale's encoding, utf-8 and iso-8859-15 are tried by default (in that order). These are the defaults that makes more sense to me, you can change the default for the ones that makes sense to you by changing the script or by using -I option in your macro definition, for example:

macro index,pager <Esc>t "v/plain\n|gt -IMS-GREEK,IBM-1148,UTF-16BE|less\n"

Weird choice of defaults indeed :P

The day CouchSurfing died

by Leandro Lucarella on 2012- 09- 13 16:08 (updated on 2012- 09- 14 13:54)- with 0 comment(s)

Some time ago CouchSurfing announced that they will become a socially responsible B-Corporation. In case you forgot about it, here is what the creators of this utopic project said then:

I believed them, and when everybody went bananas about it I thought there was some extremism and overreaction. After all, you need money to maintain the structure for a service like CS and if this change would help, it was fine with me as long as the spirit of the community was the same.

Unfortunately, now I think all these people were right. A few days ago CS announced a change in the terms of usage and privacy policy. The new ones include terms as stupid and abusive as:

5.3 Member Content License. If you post Member Content to our Services, you hereby grant us a perpetual, worldwide, irrevocable, non-exclusive, royalty-free and fully sublicensable license to use, reproduce, display, perform, adapt, modify, create derivative works from, distribute, have distributed and promote such Member Content in any form, in all media now known or hereinafter created and for any purpose, including without limitation the right to use your name, likeness, voice or identity.

They also removed all mention about being a socially responsible B-Corp, so, where is this heading at? I don't have a Facebook account because I appreciate my privacy and feel like FB never cared about it (among other things), but this terms of CS makes FB looks like the EFF!

Here is one of the many related discussions about the issue inside CS forums:

http://www.couchsurfing.org/group_read.html?gid=7621&post=13000298

People are even making complaints in their's respective country data protection and privacy agencies. But I see little sense in it, at least from a point of view of being part of CS. Even if they fix now the terms to be more reasonable, I don't want to be part of it any more.

So, from tomorrow all the content you leave in CS will be theirs forever, irrevocably. Unfortunately there is no way to opt out, or keep the old content under the old terms, so they give me no option but to remove all the content I don't want to give them perpetual, irrevocable, sublicensable, etc. rights to. And that's what I'm doing right now.

I think for now I will keep my account open (with fake information about me, even when I'm violation the terms and conditions which says I should provide truthful information about me) because there is still a great community behind it and I don't want to loose all contact with it. But my plan is to start using BeWelcome.org instead, hoping they don't eventually follow the same path CS did (their website is open source so at least there is a chance to clone the service if they do).

So, thanks for all the good times CS, and RIP!

Update

It looks like CS needs more time to see the situation, in the terms and conditions page now says the new terms are going to be applicable starting on the 21st instead of the 14th of September.

Also, the issue hit the media, at least in Germany (Google translate is your friend):

- http://www.fr-online.de/digital/datenschutz-couchsurfing-will-nutzerdaten-weitergeben,1472406,17241654.html

- http://www.zeit.de/digital/datenschutz/2012-09/couchsurfing-nutzungsbedingungen-datenschutz

- http://www.heise.de/newsticker/meldung/Datenschuetzer-kritisiert-neue-Nutzungsbedingungen-von-CouchSurfing-org-1706833.html

- http://www.bfdi.bund.de/DE/Oeffentlichkeitsarbeit/Pressemitteilungen/2012/18_CouchSurfing.html?nn=408908

Anyway, as I said before, even if they fix the terms, is game over for me, all trust in CS being a community rather than just another company trying to do data mining is gone.

Escaleascensor mecánico

by Leandro Lucarella on 2012- 08- 09 13:29 (updated on 2012- 08- 09 13:29)- with 0 comment(s)

Está en el edificio donde está la Embajada Argentina en Berlín.

Release: Status Area Display Blanking Applet 1.0 for Maemo

by Leandro Lucarella on 2012- 08- 05 12:54 (updated on 2012- 08- 05 12:54)- with 0 comment(s)

Finally 1.0 is here, and in Extras-devel! The only important change since last release is a bug fix that prevented display blanking inhibition from properly work in devices configured with a display blanking timeout of less than 30 seconds (thanks cobalt1 for the bug report).

For more information and screenshots, you can visit the website.

You can download this release (binary package and sources) from here:

- https://llucax.com.nyud.net/proj/sadba/files/1.0/

- http://maemo.org/packages/view/status-area-displayblanking-applet/

But now you just might want to simply install it using the application manager.

You can also get the source from the git repository:

https://git.llucax.com/w/software/sadba.git

Please feel free to leave your comments and suggestions here or in the Maemo Talk Thread..

Release: Status Area Display Blanking Applet 0.9 (beta) for Maemo

by Leandro Lucarella on 2012- 07- 30 22:35 (updated on 2012- 07- 30 22:35)- with 0 comment(s)

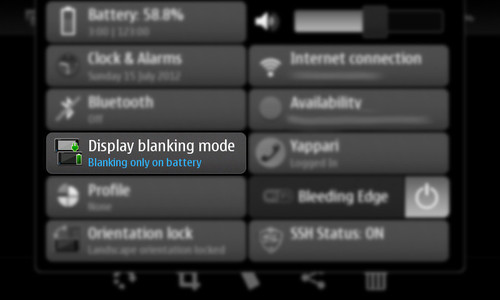

Final beta release for the Status Area Display Blanking Applet. Changes since last release:

- Show a status icon when display blanking is inhibited.

- Improve package description and add icon for the Application Manager.

- Add a extended description for display blanking modes.

- Update translation files.

- Code cleanup.

Also now the applet have a small home page and upload to Extras is on the way!

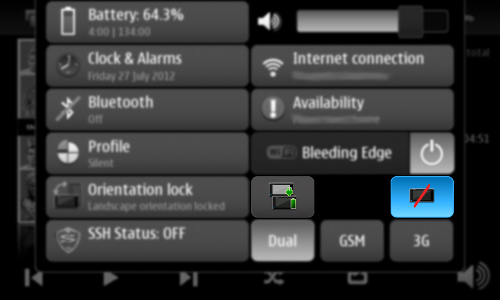

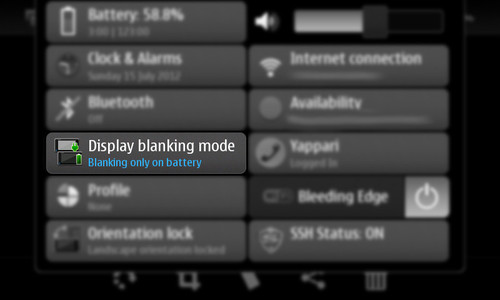

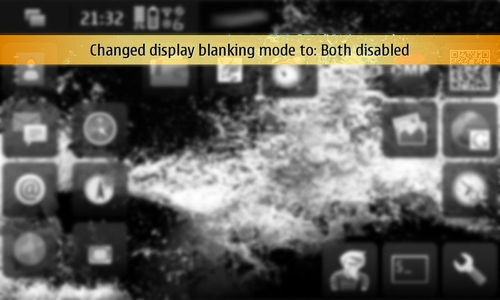

This is how this new version looks like:

You can download this 0.9 beta release (binary package and sources) from here: https://llucax.com.nyud.net/proj/sadba/files/0.9/

You can also get the source from the git repository: https://git.llucax.com/w/software/sadba.git

Please feel free to leave your comments and suggestions here or in the Maemo Talk Thread..

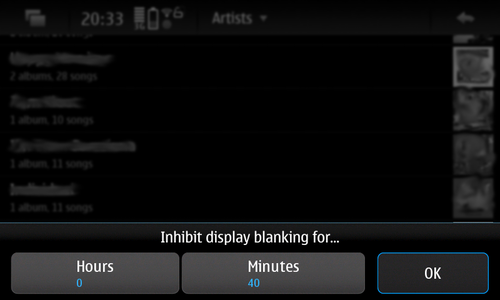

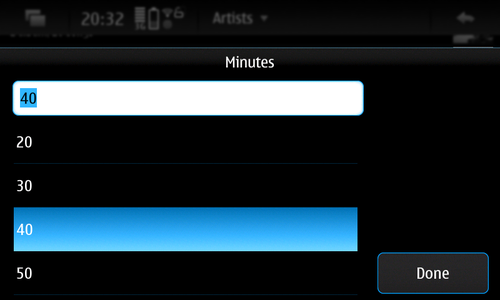

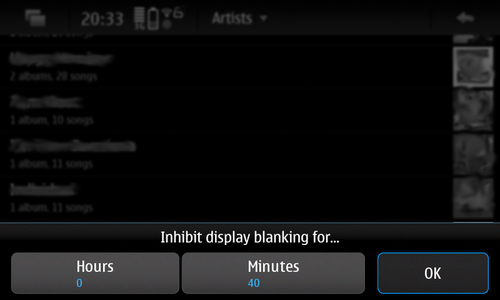

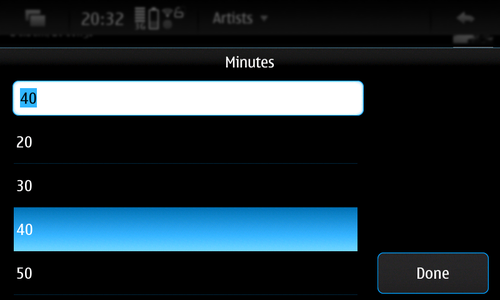

Release: Status Area Display Blanking Applet 0.5 for Maemo

by Leandro Lucarella on 2012- 07- 29 18:55 (updated on 2012- 07- 29 18:55)- with 0 comment(s)

New pre-release for the Status Area Display Blanking Applet. New timed inhibition button that inhibit display blanking for an user-defined amount of time. Also there's been some code cleanup since last release.

You can download this 0.5 pre-release (binary package and sources) from here: https://llucax.com.nyud.net/proj/sadba/files/0.5/

You can also get the source from the git repository: https://git.llucax.com/w/software/sadba.git

Please feel free to leave your comments and suggestions here or in the Maemo Talk Thread..

Release: Status Area Display Blanking Applet 0.4 for Maemo

by Leandro Lucarella on 2012- 07- 27 18:12 (updated on 2012- 07- 27 18:12)- with 0 comment(s)

New pre-release of my first Maemo application: The Status Area Display Blanking Applet. Now you inhibit display blanking without changing the display blanking mode. The GUI is a little rough compared with the previous version but it works. :)

You can download this 0.4 pre-release (binary package and sources) from here: https://llucax.com.nyud.net/proj/sadba/files/0.4/

You can also get the source from the git repository: https://git.llucax.com/w/software/sadba.git

Please feel free to leave your comments and suggestions here or in the Maemo Talk Thread..

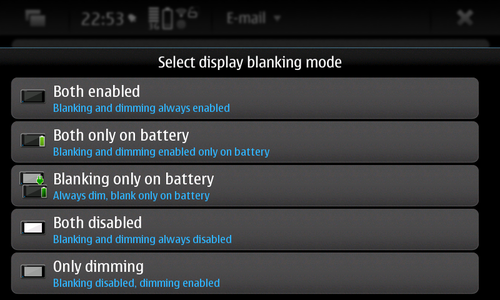

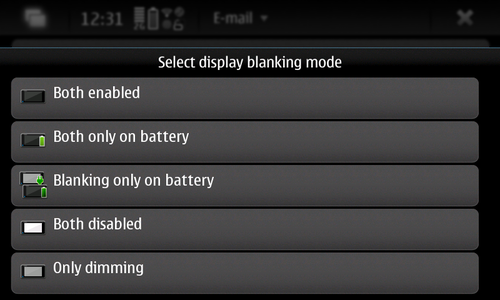

Release: Status Area Display Blanking Applet 0.3 for Maemo

by Leandro Lucarella on 2012- 07- 26 10:51 (updated on 2012- 07- 27 18:13)- with 0 comment(s)

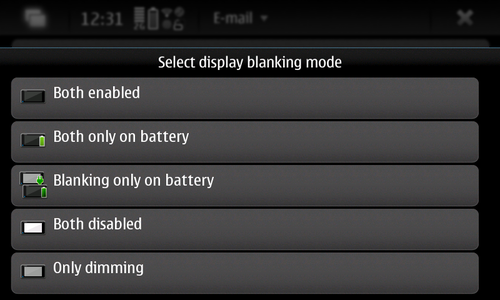

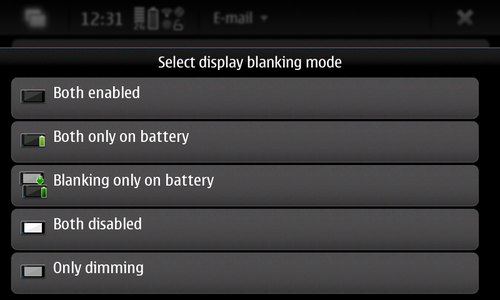

New pre-release of my first Maemo application: The Status Area Display Blanking Applet. Now you can pick whatever blanking mode you want instead of blindly cycling through all available modes, as it was in the previous version.

You can download this 0.3 pre-release (binary package and sources) from here: https://llucax.com.nyud.net/proj/sadba/files/0.3/

You can also get the source from the git repository: https://git.llucax.com/w/software/sadba.git

Please feel free to leave your comments and suggestions here or in the Maemo Talk Thread..

Release: Status Area Display Blanking Applet 0.2 for Maemo

by Leandro Lucarella on 2012- 07- 23 09:56 (updated on 2012- 07- 23 09:56)- with 0 comment(s)

Second pre-release of my first Maemo application: The Status Area Display Blanking Applet. No big changes since the last release just code cleanup and a bugfix or new features (depends on how you see it). Now the applet monitors changes on the current configuration, so if you change the display blanking mode from settings (or by any other means), it will be updated in the applet too.

You can download this 0.2 pre-release (binary package and sources) from here: https://llucax.com.nyud.net/proj/sadba/files/0.2/

You can also get the source from the git repository: https://git.llucax.com/w/software/sadba.git

Please feel free to leave your comments and suggestions here or in the Maemo Talk Thread..

Release: Status Area Display Blanking Applet 0.1 for Maemo

by Leandro Lucarella on 2012- 07- 15 20:09 (updated on 2012- 07- 15 20:09)- with 0 comment(s)

Hi, I just wanted to announce the pre-release of my first Maemo "application". The Status Area Display Blanking Applet let you easily change the display blanking mode right from the status menu, without having to go through the settings.

This is specially useful if you have a short blanking time when you use applications that you want to look at for a long time without interacting with the phone and don't inhibit display blanking by themselves (for example a web browser, image viewer or some GPS applications).

You can download this 0.1 pre-release (binary package and sources) from here: https://llucax.com.nyud.net/proj/sadba/files/0.1/

You can also get the source from the git repository: https://git.llucax.com/w/software/sadba.git

Here are some screenshots (the application is highlighted so you can spot it more easily :) ):

Please feel free to leave your comments and suggestions.

I'll upload the package to extras-devel when I have some time to learn the procedure.

Save Peter Sundes from jail

by Leandro Lucarella on 2012- 07- 14 20:06 (updated on 2012- 07- 14 20:06)- with 0 comment(s)

So, Peter Sundes from The Pirate Bay has been convicted to 1 year prison and 11 million euro. He lost the appeal too, so now he is looking for a last resort, a plea for pardon, a procedure where you can get a judicial sentencing undone by the political administration in exceptional circumstances.

The plea for pardon is not serious in the sense that he is not really doing so, he is denouncing an extremely corrupt and absurd trial. You can read the plea and find out, is long but really interesting how the trial makes no sense (besides what's your stand on file sharing, copyright, etc.).

If you believe the trial was unfair, you can sign this petition, it will probably be completely ignored, but hey, it only takes 2 seconds, worth trying.

Release: Mutt with NNTP Debian package 1.5.21-5nntp3

by Leandro Lucarella on 2012- 07- 05 19:59 (updated on 2012- 07- 05 19:59)- with 0 comment(s)

This is just a quick fix for yesterday's release. Now mutt-nntp depends on mutt >= 1.5.21-5. This should allow having mutt-nntp installed with the standard distribution mutt package for both Debian and Ubuntu (please report any problems).

If you have Ubuntu 12.04 (Precise) and amd64 or i386 arch, just download and install the provided packages.

For other setups, here are the quick (copy&paste) instructions:

ver=1.5.21

deb_ver=$ver-5nntp3

url=https://llucax.com.nyud.net/proj/mutt-nntp-debian/files/latest

wget $url/mutt_$deb_ver.dsc $url/mutt_$deb_ver.diff.gz \

http://ftp.de.debian.org/debian/pool/main/m/mutt/mutt_$ver.orig.tar.gz

sudo apt-get build-dep mutt

dpkg-source -x mutt_$deb_ver.dsc

cd mutt-$ver

dpkg-buildpackage -rfakeroot

# install any missing packages reported by dpkg-buildpackage and try again

cd ..

sudo dpkg -i mutt-nntp_${deb_ver}_*.deb

Now you can enjoy reading your favourite newsgroups and your favourite mailing lists via Gmane with Mutt without leaving the beauty of your packaging system. No need to thank me, I'm glad to be helpful (but if you want to make a donation, just let me know ;).

Note

You should always install the same mutt version as the one the mutt-nntp is based on (i.e. the version number without the nntpX suffix, for example if mutt-nntp version is 1.5.21-5nntp1, your mutt version should be 1.5.21-5 or 1.5.21-5ubuntu2). A newer version will satisfy the dependency too but it is not guaranteed to work (even when it probably will, specially if the upstream version is the same). You could also install the generated/provided mutt package, but that might be problematic when upgrading your distribution.

See the project page for more details.

Release: Mutt with NNTP Debian package 1.5.21-5nntp2

by Leandro Lucarella on 2012- 07- 04 17:24 (updated on 2012- 07- 04 17:24)- with 0 comment(s)

A new version of Mutt with NNTP support is available. This version only moves Mutt with NNTP support to a separate package in the hopes of having a smoother interaction with the distribution packages (avoiding automatic updates with less hassle). Now a new mutt-nntp package is generated.

If you have Ubuntu 12.04 (Precise) and amd64 or i386 arch, just download and install the provided packages.

For other setups, here are the quick (copy&paste) instructions:

ver=1.5.21

deb_ver=$ver-5nntp2

url=https://llucax.com.nyud.net/proj/mutt-nntp-debian/files/latest

wget $url/mutt_$deb_ver.dsc $url/mutt_$deb_ver.diff.gz \

http://ftp.de.debian.org/debian/pool/main/m/mutt/mutt_$ver.orig.tar.gz

sudo apt-get build-dep mutt

dpkg-source -x mutt_$deb_ver.dsc

cd mutt-$ver

dpkg-buildpackage -rfakeroot

# install any missing packages reported by dpkg-buildpackage and try again

cd ..

sudo dpkg -i mutt-nntp_${deb_ver}_*.deb

Now you can enjoy reading your favourite newsgroups and your favourite mailing lists via Gmane with Mutt without leaving the beauty of your packaging system. No need to thank me, I'm glad to be helpful (but if you want to make a donation, just let me know ;).

Note

You should always install the same mutt version as the one the mutt-nntp is based on (i.e. the version number without the nntpX suffix, for example if mutt-nntp version is 1.5.21-5nntp1, your mutt version should be 1.5.21-5). I'm not forcing that in the dependencies because in general it shouldn't be a big issue using an older version. You could also install the generated/provided mutt package, but that might be problematic when upgrading your distribution.

See the project page for more details.

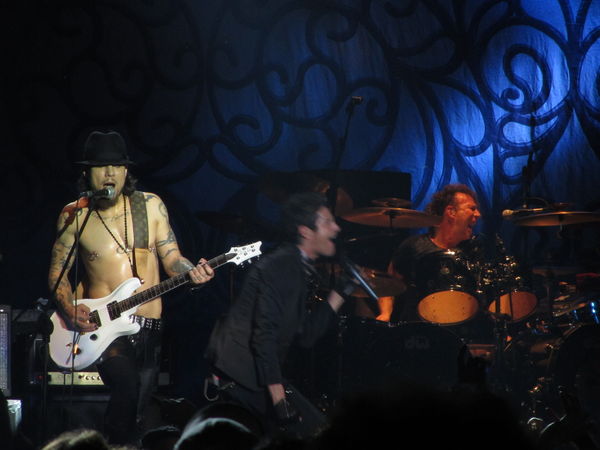

The Shins @ Huxley's Neue Welt (2012-03-28)

by Leandro Lucarella on 2012- 07- 03 11:01 (updated on 2012- 07- 03 11:01)- with 0 comment(s)

-01.mini.jpg)

The Shins @ Huxley's Neue Welt (2012-03-28) (1)

-02.mini.jpg)

The Shins @ Huxley's Neue Welt (2012-03-28) (2)

-03.mini.jpg)

The Shins @ Huxley's Neue Welt (2012-03-28) (3)

-04.mini.jpg)

The Shins @ Huxley's Neue Welt (2012-03-28) (4)

Querying N900 address book

by Leandro Lucarella on 2012- 07- 02 20:49 (updated on 2012- 07- 02 20:49)- with 0 comment(s)

Since there is not a lot of information on how to hack Maemo's address book to find some contacts with a mobile phone number, I'll share my findings.

Since setting up an environment to cross-compile for ARM is a big hassle, I decided to write this small test program in Python, (ab)using the wonderful ctypes module to avoid compiling at all.

Here is a very small script to use the (sadly proprietary) OSSO Addressbook library:

# This function get all the names in the address book with mobile phone numbers

# and print them. The code is Python but is as similar as C as possible.

def get_all_mobiles():

osso_ctx = osso_initialize("test_abook", "0.1", FALSE)

osso_abook_init(argc, argv, hash(osso_ctx))

roster = osso_abook_aggregator_get_default(NULL)

osso_abook_waitable_run(roster, g_main_context_default(), NULL)

contacts = osso_abook_aggregator_list_master_contacts(roster)

for contact in glist(contacts):

name = osso_abook_contact_get_display_name(contact)

# Somehow hackish way to get the EVC_TEL attributes

field = e_contact_field_id("mobile-phone")

attrs = e_contact_get_attributes(contact, field)

mobiles = []

for attr in glist(attrs):

types = e_vcard_attribute_get_param(attr, "TYPE")

for t in glist(types):

type = ctypes.c_char_p(t).value

# Remove this condition to get all phone numbers

# (not just mobile phones)

if type == "CELL":

mobiles.append(e_vcard_attribute_get_value(attr))

if mobiles:

print name, mobiles

# Python

import sys

import ctypes

# be sure to import gtk before calling osso_abook_init()

import gtk

import osso

osso_initialize = osso.Context

# Dynamic libraries bindings

glib = ctypes.CDLL('libglib-2.0.so.0')

g_main_context_default = glib.g_main_context_default

def glist(addr):

class _GList(ctypes.Structure):

_fields_ = [('data', ctypes.c_void_p),

('next', ctypes.c_void_p)]

l = addr

while l:

l = _GList.from_address(l)

yield l.data

l = l.next

osso_abook = ctypes.CDLL('libosso-abook-1.0.so.0')

osso_abook_init = osso_abook.osso_abook_init

osso_abook_aggregator_get_default = osso_abook.osso_abook_aggregator_get_default

osso_abook_waitable_run = osso_abook.osso_abook_waitable_run

osso_abook_aggregator_list_master_contacts = osso_abook.osso_abook_aggregator_list_master_contacts

osso_abook_contact_get_display_name = osso_abook.osso_abook_contact_get_display_name

osso_abook_contact_get_display_name.restype = ctypes.c_char_p

ebook = ctypes.CDLL('libebook-1.2.so.5')

e_contact_field_id = ebook.e_contact_field_id

e_contact_get_attributes = ebook.e_contact_get_attributes

e_vcard_attribute_get_value = ebook.e_vcard_attribute_get_value

e_vcard_attribute_get_value.restype = ctypes.c_char_p

e_vcard_attribute_get_param = ebook.e_vcard_attribute_get_param

# argc/argv adaption

argv_type = ctypes.c_char_p * len(sys.argv)

argv = ctypes.byref(argv_type(*sys.argv))

argc = ctypes.byref(ctypes.c_int(len(sys.argv)))

# C-ish aliases

NULL = None

FALSE = False

# Run the test

get_all_mobiles()

Here are some useful links I used as reference:

- http://wiki.maemo.org/PyMaemo/Accessing_APIs_without_Python_bindings

- http://wiki.maemo.org/Documentation/Maemo_5_Developer_Guide/Using_Generic_Platform_Components/Using_Address_Book_API

- http://maemo.org/api_refs/5.0/5.0-final/libebook/

- http://maemo.org/api_refs/5.0/5.0-final/libosso-abook/

- https://garage.maemo.org/plugins/scmsvn/viewcvs.php/releases/evolution-data-server/1.4.2.1-20091104/addressbook/libebook-dbus/?root=eds

- http://www.developer.nokia.com/Community/Discussion/showthread.php?200914-How-to-print-all-contact-names-in-N900-addressbook-Please-Help

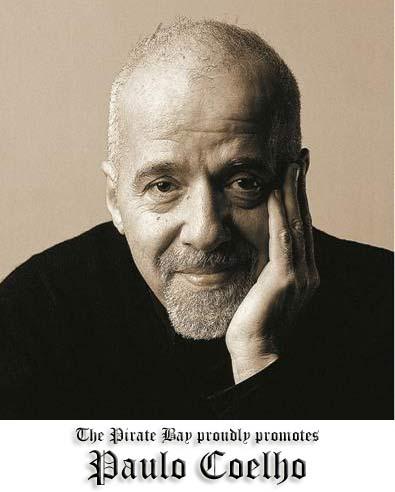

The Pirate Cohelo

by Leandro Lucarella on 2012- 01- 30 20:34 (updated on 2012- 01- 30 20:34)- with 0 comment(s)

Nice post by Paulo Coelho promoting the piracy of his own books via The Pirate Bay (whom reciprocally returns the favor).

The Pirate Bay starts today a new and interesting system to promote arts.

Do you have a band? Are you an aspiring movie producer? A comedian? A cartoon artist?

They will replace the front page logo with a link to your work.

As soon as I learned about it, I decided to participate. Several of my books are there, and as I said in a previous post, My thoughts on SOPA, the physical sales of my books are growing since my readers post them in P2P sites.

Welcome to download my books for free and, if you enjoy them, buy a hard copy – the way we have to tell to the industry that greed leads to nowhere.

Love

The Pirate Coelho

Go, search, download, read and if you like them, buy or show your appreciation in another way.

It's finally here

by Leandro Lucarella on 2012- 01- 16 08:30 (updated on 2012- 01- 16 08:30)- with 0 comment(s)

More adventures with the N900

by Leandro Lucarella on 2011- 12- 18 19:30 (updated on 2011- 12- 18 19:30)- with 0 comment(s)

OK, after I recovered my phone without needing to reflash once, I was even much closer to do it again because of a new problem.

After missing an appointment and arriving at work about 3 hours late, I realized my phone stopped reproducing sound and vibrating when an alarm was fired. At first I thought I put the alarm incorrectly but then I verified that the alarm was not working. I still got a popup with the alarm message, but no sound or vibration.

So... Time to debug the problem. After searching a lot, I couldn't find anybody with my same problem, I found similar, but not the same, so I decided to report a bug. I got a very fast but useless response. Great!

Making long story short, I finally found some IRC channels and mailing lists where I could find a more opensourceish support that the one provided in the forums and bugzilla. So I'm happy I finally found a place where you can talk to actual developers.

I commented my problem and just after a very trivial but extremely useful suggestion (installing syslogd), I could trace the origin or the problem and fix it (I just love you strace!).

I also had another problem, suddenly the skype calls stopped working. Again the syslog helped a lot. Unfortunately I didn't save the exact syslog error message, but it was something like:

GStreamer - Could not convert static caps "!`phmcadion/x-rtp, media=(string)video, payload=(int)[ 96, 127 ], clock-rate=(int)[ 1, 2147483647 ], encoding-name=(string)MP4V-ES"

As the MIME TYPE looked like garbage, I just grep(1)ed the filesystem searching for that string, and I found some binary file at /home/user/.gstreamer-0.10/registry.arm.bin. I backed up the file, remove it, and everything started working again (the file was recreated but with a very different content).

I have no idea how the symlink or the gstreamer file got broken, except maybe because of the unexpected reboot because of the broken batterypatch, but still, is really strange.

Anyway... Lessons learned:

- Maemo (Nokia) bugzilla is useless for getting help

- Install syslogd to debug Nokia N900 problems

- The maemo developers mailing list is your friend

Conclusion: Reflash my ass!

How to rescue your Nokia N900 without reflashing

by Leandro Lucarella on 2011- 12- 11 16:40 (updated on 2011- 12- 11 16:40)- with 0 comment(s)

I bought a Nokia N900 recently, a great toy if you like to have a phone with a Linux distribution that uses dpkg as package manager :)

Of course you can use it as an end user, and never find out, but as the geek I am, I had to hack it, and use the devel package repositories. Of course, with that comes the problems (and the fun! :D).

The last update of the batterypatch package came with a weird feature. The device rebooted itself each time it starts, leaving it in a restart loop that rendered the device unusable.

Searching for valuable information was not easy (thanks forums! You SUCK at organizing information... I miss mailing lists).

Anyway, I hope I can save some work to someone if you get in a similar situation, so you don't have to waste ours searching the Maemo Forums.

First you will need a tool to flash the phone (it can do other things besides flashing it, I used the maemo_flasher-3.5_2.5.2.2_i386.deb file). You can also check some instructions on how to load a (very) basic rescue image (from Meego). The good thing is this image is an initrd that's loaded in MEMORY, so you don't loose anything if you tried, the device goes to it's previous state (broken in my case :P) after a reboot.

What this image can do is put the device in USB mass storage mode (the embedded MMC -eMMC- and the external MMC). I've done this to backup my eMMC data, which holds the MyDocs vfat partition and the 2 GiB ext3 partition used to install optional software. You can also put the device in USB networking mode, you can get a shell console (and reboot/power off the device), but I found that pretty useless (because you don't have any useful tools, the backlit is not turned on, so is really hard to see anything, and because the kayboard doesn't have the function key mapped, so you can't even write a "/").

The bad thing about this image, is you can't access to the root filesystem (wich is stored in another NAND 256MiB memory). I wanted to access it for 2 reasons. First, I wanted to edit some files that the batterypatch program created to see if that fixed the rebooting problem. And if now, I wanted to make a backup of the rootfs so I didn't loose most of my customizations and installed software.

I first found that a way to access the rootfs was to install Meego in a uSD memory, but for that I needed a 4GiB uSD. Also it looked like too much work, it has to be something battery and easier to just mount the rootfs and play around.

And I finally found it. It was the hardest thing to found, that's why I not only passing you the original link, I'm also hosting my own copy because I have the feeling it can disappear any time! :P

This image let's you do all the same the other image can, but it turns on the backlit, it has better support for the keyboard (you can type a "/") and it can mount the UBI root filesystem. Even more, it comes with a telnet daemon, so you can even do the rescue work remotely using USB networking ;)

You can see the instructions for some of the tasks, but here is how I did to be able to log in using telnet, which is not documented elsewhere that I know off. Once you have your image loaded:

You have to activate the USB networking in the device: /rescueOS/usbnetworking-enable.sh

Configure your host PC to assign an IP to usb0: sudo ip a add 192.168.2.14/24 dev usb0 && sudo ip link set usb0 up

Start the telnet daemon in the device: telnetd

I couldn't find out the root password, and since the initrd root filesystem is read-only, so I did this to change the root password:

cp -a /etc /run/ mount --bind /run/etc /etc passwd

Now type the new root password.

That's it, log in via telnet from the host PC: telnet 192.168.2.15 and have fun!

With this I just could edit the broken files and saved the device without even needing to reflash it, but if you're not so lucky, you can just backup the root filesystem and reflash using this instructions (I didn't tested them, but seems pretty official).

Now I should probably have to try the recovery-boot package, if it works well it might be even easier to rescue the phone using that ;)

GML

by Leandro Lucarella on 2011- 07- 23 22:48 (updated on 2011- 07- 23 22:48)- with 0 comment(s)

Graffiti Markup Language is...

An universal, XML based, open file format designed to store graffiti motion data (x and y coordinates and time). The format is designed to maximize readability and ease of implementation, even for hobbyist programmers, artists and graffiti writers. Popular applications currently implementing GML include Graffiti Analysis and EyeWriter. Beyond storing data, a main goal of GML is to spark interest surrounding the importance (and fun) of open data and introduce open source collaborations to new communities. GML is intended to be a simple bridge between ink and code, promoting collaborations between graffiti writers and hackers.

An probably the funniest part:

GML is today’s new digital standard for tomorrow’s vandals.

The Black Keys - Howlin' For You

by Leandro Lucarella on 2011- 07- 22 23:34 (updated on 2011- 07- 22 23:34)- with 0 comment(s)

Interesting music video by The Black Keys shaped as a movie trailer.

Tilt

by Leandro Lucarella on 2011- 07- 20 19:35 (updated on 2011- 07- 20 19:35)- with 0 comment(s)

Tilt is a Firefox extension that lets you visualize any web page DOM tree in 3D.

Via Mozilla hacks.

Futurama just keeps getting better and better

by Leandro Lucarella on 2011- 07- 10 21:04 (updated on 2011- 07- 10 21:04)- with 0 comment(s)

Fragment of Law and Oracle episode (S06E17/6ACV16):

10 delirious songs with strange vocals

by Leandro Lucarella on 2011- 07- 06 03:38 (updated on 2011- 07- 06 03:38)- with 0 comment(s)

10 canciones delirantes con voces extrañas

[Grooveshark murió y con él esta lista]

Harvie Krumpet

by Leandro Lucarella on 2011- 07- 04 14:53 (updated on 2011- 07- 04 14:53)- with 0 comment(s)

Harvey Krumpet (es) by Adam Elliot

(or you can see it in 1 part only in English without Spanish subtitles here)

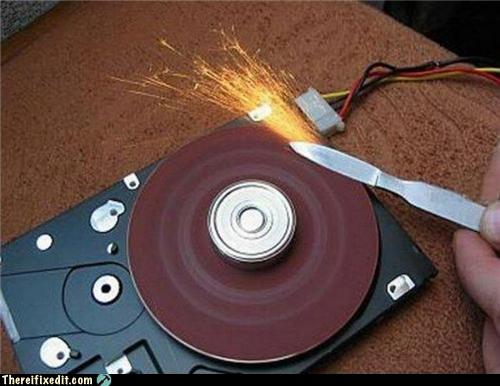

There, I fixed it!

by Leandro Lucarella on 2011- 07- 04 02:17 (updated on 2011- 07- 04 02:17)- with 0 comment(s)

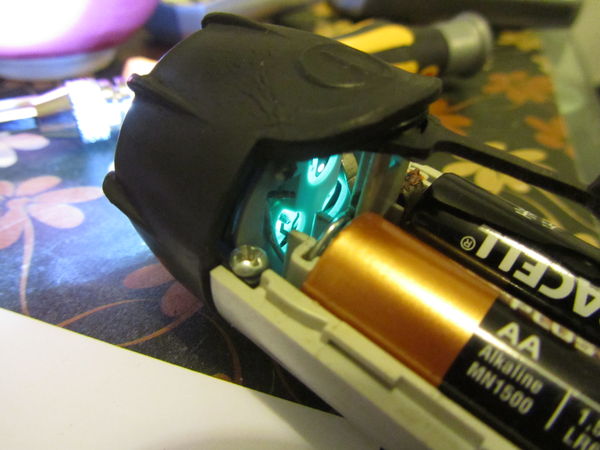

My cheap bike front light is not very water resistant...

WikiLeaks banking blockage advertisement

by Leandro Lucarella on 2011- 06- 29 20:50 (updated on 2011- 06- 29 20:50)- with 0 comment(s)

Two dogs dining in a busy restaurant

by Leandro Lucarella on 2011- 06- 24 15:49 (updated on 2011- 06- 24 15:49)- with 0 comment(s)

Berlin

by Leandro Lucarella on 2011- 06- 16 16:19 (updated on 2011- 06- 16 16:19)- with 0 comment(s)

Babasónicos - Dopádromo

by Leandro Lucarella on 2011- 06- 13 02:02 (updated on 2011- 06- 13 02:02)- with 0 comment(s)

I was just listening to Dopádromo from Babasónicos and thinking: what a good album!

The Architecture of Open Source Applications

by Leandro Lucarella on 2011- 06- 07 00:56 (updated on 2011- 06- 07 00:56)- with 0 comment(s)

The Architecture of Open Source Applications

Architects look at thousands of buildings during their training, and study critiques of those buildings written by masters. In contrast, most software developers only ever get to know a handful of large programs well—usually programs they wrote themselves—and never study the great programs of history. As a result, they repeat one another's mistakes rather than building on one another's successes.

This book's goal is to change that. In it, the authors of twenty-five open source applications explain how their software is structured, and why. What are each program's major components? How do they interact? And what did their builders learn during their development? In answering these questions, the contributors to this book provide unique insights into how they think.

If you are a junior developer, and want to learn how your more experienced colleagues think, this book is the place to start. If you are an intermediate or senior developer, and want to see how your peers have solved hard design problems, this book can help you too.

I hope I can find the time to read this (at least some chapters).

It's ALIVE!

by Leandro Lucarella on 2011- 06- 05 00:45 (updated on 2011- 06- 05 00:45)- with 2 comment(s)

Thank you Garrett, you saved my bike! =D

Today I got, after looking for one for several months, my derailleur hanger!

Raleigh (both Argentina and USA) were completely unhelpful, so I got to find it somewhere else, and finally found one at bicyclederailleurhangers.com (mine is #22 =).

I hope tomorrow I can finally fix my bike.

/me happy!

Beastie Boys - Fight For Your Right (Revisited)

by Leandro Lucarella on 2011- 05- 28 22:06 (updated on 2011- 05- 28 22:06)- with 0 comment(s)

Lime total.

Never leave your e-mail to Fujitsu

by Leandro Lucarella on 2011- 05- 25 17:26 (updated on 2011- 05- 25 17:26)- with 0 comment(s)

Fujitsu I hate you. I usually use an alias when putting my e-mail in some company form, using the feature most MTAs have to use something like myrealuser-<somealias>@example.com.

Lately I've been receiving tons of spam from luca-fujitsu@... and the kind of spam my Bogofilter have a hard time to swallow, so it's becoming really annoying. I'll just see how to make luca-fujitsu@... and invalid e-mail and reject all mails delivered to it.

Fuck off Fujitsu!

Release: Mutt with NNTP Debian package 1.5.21-5nntp1

by Leandro Lucarella on 2011- 05- 24 19:59 (updated on 2011- 05- 24 19:59)- with 0 comment(s)

I've updated my Mutt Debian package with the NNTP patch to the latest Debian Mutt package.

This release is to bring just the regular bugfixing round from Debian.

If you have Debian testing/unstable and amd64 or i386 arch, just download and install the provided packages.

For other setups, here are the quick (copy&paste) instructions:

ver=1.5.21

deb_ver=$ver-5nntp1

url=https://llucax.com.nyud.net/proj/mutt-nntp-debian/files/latest

wget $url/mutt_$deb_ver.dsc $url/mutt_$deb_ver.diff.gz \

http://ftp.de.debian.org/debian/pool/main/m/mutt/mutt_$ver.orig.tar.gz

sudo apt-get build-dep mutt

dpkg-source -x mutt_$deb_ver.dsc

cd mutt-$ver

dpkg-buildpackage -rfakeroot

# install any missing packages reported by dpkg-buildpackage and try again

cd ..

sudo dpkg -i mutt_${deb_ver}_*.deb mutt-patched_${deb_ver}_*.deb

See the project page for more details.

10 songs of...

by Leandro Lucarella on 2011- 05- 23 22:40 (updated on 2011- 05- 23 22:40)- with 0 comment(s)

New section, let's see for how long I can keep this up =)

The idea is to make a compilation of 10 songs of (somehow) unknown music (and/or bands) picking up a theme. At first I'll try to pick only local (Argentinian) bands, but maybe in the future I can go more global.