Bitcoin: p2p virtual currency

by Leandro Lucarella on 2011-05-17 00:04 (updated on 2011-05-17 00:04), with 1 comment(s)

Bitcoin is one of the most subversive ideas I have ever read about. It is as scary as it is exciting, given how it could change the dynamics of the world economy if it works.

Bitcoin is (quoting WeUseCoins.com):

- Decentralized

  Bitcoin is the first digital currency that is completely distributed. The network is made up of users like yourself so no bank or payment processor is required between you and whoever you're trading with. This decentralization is the basis for Bitcoin's security and freedom.

- Worldwide

  Your Bitcoins can be accessed from anywhere with an Internet connection. Anybody can start mining, buying, selling or accepting Bitcoins regardless of their location.

- No small print

  If you have Bitcoins, you can send them to anyone else with a Bitcoin address. There are no limits, no special rules to follow or forms to fill out.

  More complex types of transactions can be built on top of Bitcoin as well, but sometimes you just want to send money from A to B without worrying about limits and policies.

- Very low fees

  Currently you can send Bitcoin transactions for free. However, a fee on the order of 1 bitcent will eventually be necessary for your transaction to be processed more quickly. Miners compete on fees, which ensures that they will always stay low in the long run. More on transaction fees (Bitcoin Wiki).

- Own your money!

  You don't have to be a criminal to wake up one day and find your account has been frozen. Rules vary from place to place, but in most jurisdictions accounts may be frozen by credit card collection agencies, by a spouse filing for divorce, by mistake or for terms of service violations.

  In contrast, Bitcoins are like cash - seizing them requires access to your private keys, which could be placed on a USB stick, thereby enjoying the full legal and practical protections of physical property.

Here is a video, if you are too lazy to read:

If you want more detailed information, there is a paper describing the technical side of the project (which I read and, to be honest, didn't fully understand).

You also have to add Bitcoin mining to the equation, which is not very well explained there. Bitcoin mining is a business, just like gold mining is. You need resources to do it, and if you don't do it efficiently, you'll lose money (the electricity and hardware costs will exceed what you're earning).
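To make the "efficiently" part a bit more concrete, here is a back-of-the-envelope sketch in C. It assumes the commonly cited approximation that a difficulty of 1 takes about 2^32 hashes on average to find a block (so a difficulty of D takes about D × 2^32), and it deliberately ignores hardware amortization, pool fees and luck. The function names and every input value are hypothetical; for reference, the block reward was 50 BTC per block at the time of writing.

```c
#include <assert.h>

/* Approximate number of hashes needed, on average, to find a block
   at difficulty 1 (about 2^32). */
#define HASHES_AT_DIFFICULTY_1 4294967296.0

/* Expected coins mined per day by hardware doing `hash_rate` hashes/second. */
static double expected_coins_per_day(double hash_rate, double difficulty,
                                     double block_reward) {
    assert(difficulty > 0.0);
    double hashes_per_block = difficulty * HASHES_AT_DIFFICULTY_1;
    double blocks_per_day = hash_rate * 86400.0 / hashes_per_block;
    return blocks_per_day * block_reward;
}

/* Expected daily profit in fiat currency: mining revenue minus electricity.
   Hardware cost, pool fees and variance are deliberately ignored. */
static double daily_profit(double hash_rate, double difficulty,
                           double block_reward, double coin_price,
                           double watts, double price_per_kwh) {
    double revenue = expected_coins_per_day(hash_rate, difficulty, block_reward)
                     * coin_price;
    double electricity = watts / 1000.0 * 24.0 * price_per_kwh;
    return revenue - electricity;
}
```

When `daily_profit` goes negative, the electricity bill alone is larger than the expected income, which is exactly the losing scenario described above; as the difficulty rises, the same hardware earns proportionally less.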

Quoting again:

The mining difficulty expresses how much harder the current block is to generate compared to the first block. So a difficulty of 70000 means to generate the current block you have to do 70000 times more work than Satoshi had to do generating the first block. To be fair, though, back then mining was a lot slower and less optimized.

The difficulty changes every 2016 blocks. The network tries to change it such that 2016 blocks at the current global network processing power take about 14 days. That's why, when the network power rises, the difficulty rises as well.

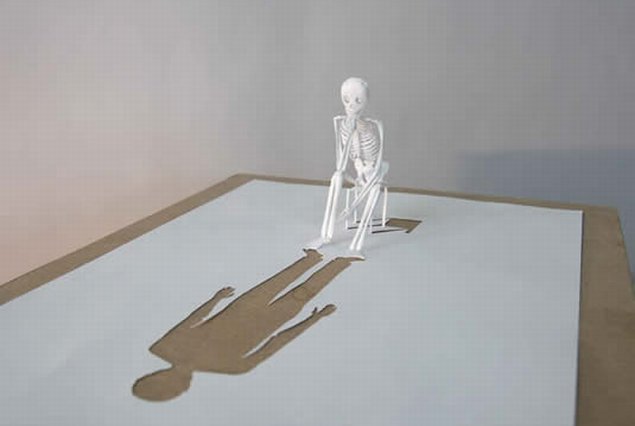
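The retargeting rule quoted above is proportional: if the last 2016 blocks took less than 14 days, the difficulty goes up by the same ratio, and if they took longer, it goes down. Below is a minimal sketch in C, assuming a floating-point difficulty. The reference client actually adjusts the 256-bit target stored in block headers, and it also caps each adjustment at a factor of 4 in either direction, which the sketch mimics. The name `retarget` is hypothetical.

```c
#include <assert.h>
#include <stdint.h>

#define RETARGET_INTERVAL 2016                /* blocks between adjustments */
#define TARGET_TIMESPAN   (14 * 24 * 60 * 60) /* two weeks, in seconds */

/* New difficulty after the last RETARGET_INTERVAL blocks took
   `actual_timespan` seconds to mine. */
static double retarget(double old_difficulty, int64_t actual_timespan) {
    assert(old_difficulty > 0.0);
    /* Limit each adjustment to a factor of 4 in either direction. */
    if (actual_timespan < TARGET_TIMESPAN / 4) actual_timespan = TARGET_TIMESPAN / 4;
    if (actual_timespan > TARGET_TIMESPAN * 4) actual_timespan = TARGET_TIMESPAN * 4;
    /* Faster than two weeks -> higher difficulty; slower -> lower. */
    return old_difficulty * (double)TARGET_TIMESPAN / (double)actual_timespan;
}
```

For example, if the network found 2016 blocks in 7 days (`actual_timespan` equal to `TARGET_TIMESPAN / 2`), `retarget` doubles the difficulty, which is why rising network power drags the difficulty up with it.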

Breathtaking Sculptures Made Out of a Single Paper Sheet

by Leandro Lucarella on 2010-06-03 22:50 (updated on 2010-06-04 04:17), with 2 comment(s)

Immix mark-region garbage collector

by Leandro Lucarella on 2009-04-25 20:39 (updated on 2009-04-25 20:39), with 0 comment(s)

Yesterday Fawzi Mohamed pointed me to a Tango forums post (<rant>God! I hate forums</rant> =) where Keith Nazworth announced that he wants to start a new GC implementation in his spare time.

He wants to progressively implement the Immix Garbage Collector.

I read the paper and it looks interesting. It also looks like it could benefit from the parallelization I plan to add to the current GC, so maybe we can coordinate our efforts to leave open the possibility of integrating both improvements in the future.

A few words about the paper: the heap organization is pretty similar to the one in the current GC implementation, except that Immix proposes not dividing pages into fixed-size bins, and instead doing bump-pointer allocation of variable-sized objects inside a block. Besides that, all the other optimizations I saw in the paper are fairly general and could be applied to the current GC at some point (although some of them may not fit as well). Among these optimizations are opportunistic moving to avoid fragmentation, parallel marking, thread-local pools/allocators, and generations. Almost all of them can be implemented incrementally, starting with a very basic collector that is not very far from the current one.
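The difference between fixed-size bins and bump-pointer allocation inside a block can be sketched in C. This assumes a block split into fixed-size lines that a marking phase (omitted here) flags as live; allocation just advances a cursor through the current run of free lines (a "hole") and jumps to the next hole when an object does not fit. The block and line sizes, the 8-byte alignment and all names are assumptions for illustration, and marking, opportunistic evacuation and the paper's special handling of medium-sized objects are left out.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical sizes for this sketch. */
#define BLOCK_BYTES     32768u
#define LINE_BYTES      128u
#define LINES_PER_BLOCK (BLOCK_BYTES / LINE_BYTES)

typedef struct {
    unsigned char data[BLOCK_BYTES];
    unsigned char line_marked[LINES_PER_BLOCK]; /* 1 = line holds live data */
    size_t cursor;  /* next free byte in the current hole */
    size_t limit;   /* one past the last free byte of the current hole */
} Block;

/* Move cursor/limit to the next run of unmarked lines at or after the
   line-aligned offset `from`. Returns 0 when the block has no more holes. */
static int next_hole(Block *b, size_t from) {
    size_t line = from / LINE_BYTES;
    while (line < LINES_PER_BLOCK && b->line_marked[line]) line++;
    if (line == LINES_PER_BLOCK) return 0;
    size_t end = line;
    while (end < LINES_PER_BLOCK && !b->line_marked[end]) end++;
    b->cursor = line * LINE_BYTES;
    b->limit = end * LINE_BYTES;
    return 1;
}

/* Restart allocation from the beginning of the block (marks are kept). */
static void block_rewind(Block *b) { b->cursor = 0; b->limit = 0; }

static void block_init(Block *b) {
    memset(b->line_marked, 0, sizeof b->line_marked);
    block_rewind(b);
}

/* Bump-pointer allocation of a variable-sized object. */
static void *block_alloc(Block *b, size_t size) {
    assert(size > 0);
    size = (size + 7) & ~(size_t)7;       /* keep 8-byte alignment */
    while (b->cursor + size > b->limit) { /* does not fit in this hole */
        if (!next_hole(b, b->limit)) return NULL;
    }
    void *p = b->data + b->cursor;
    b->cursor += size;
    return p;
}
```

Compared with a bin allocator, there is no per-size free list to consult: the fast path is one addition and one comparison, and objects of different sizes end up next to each other, which is what gives Immix its locality.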

There was also some discussion in the newsgroup about adding the hooks the language needs to allow a reference-counting garbage collector (don't be fooled by the subject! It is not about disabling the GC =), and about implementing weak references. There has been a lot of discussion about GC in D lately, which is really exciting!

Accurate Garbage Collection in an Uncooperative Environment

by Leandro Lucarella on 2009-03-21 20:23 (updated on 2009-03-22 03:05), with 0 comment(s)

I just read the paper Accurate Garbage Collection in an Uncooperative Environment.

Unfortunately, this paper mostly tries to solve problems that D doesn't consider problems, like portability (it targets language implementations that emit C code instead of native machine code, like the Mercury language mentioned in the paper). Starting from the problem of tracing the C stack portably, it proposes injecting code into functions to build a linked list of stack information (describing each function's local variables), so the stack can be traced accurately.
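Here is a minimal sketch of the linked-list idea in C, assuming one common layout: each instrumented function keeps a frame record on the C stack holding the addresses of its pointer-typed locals, links it into a global list on entry, and unlinks it on exit. The names (`Frame`, `shadow_top`, `frame_push`, `visit_roots`) and the fixed limit of 4 roots per frame are hypothetical; a compiler emitting C would generate this bookkeeping automatically rather than having it written by hand.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_ROOTS 4 /* hypothetical per-frame limit, just for this sketch */

typedef struct Frame {
    struct Frame *prev;      /* caller's frame record */
    size_t nroots;           /* how many entries of roots[] are used */
    void **roots[MAX_ROOTS]; /* addresses of the function's pointer locals */
} Frame;

static Frame *shadow_top = NULL; /* innermost active frame */

/* Code injected at function entry and exit. */
static void frame_push(Frame *f) { f->prev = shadow_top; shadow_top = f; }
static void frame_pop(Frame *f) { assert(shadow_top == f); shadow_top = f->prev; }

/* The collector's root scan: walk the list and hand every root slot to
   `visit`. Returns the number of slots visited. */
static size_t visit_roots(void (*visit)(void **slot)) {
    size_t n = 0;
    for (Frame *f = shadow_top; f != NULL; f = f->prev) {
        for (size_t i = 0; i < f->nroots; i++) {
            visit(f->roots[i]);
            n++;
        }
    }
    return n;
}

/* --- Example of what instrumented code would look like --- */
static size_t roots_seen;
static void ignore_slot(void **slot) { (void)slot; }

static void callee(void) {
    void *tmp = NULL;
    Frame f = { NULL, 1, { &tmp } };
    frame_push(&f);
    roots_seen = visit_roots(ignore_slot); /* pretend a collection runs here */
    frame_pop(&f);
}

static size_t caller(void) {
    void *a = NULL, *b = NULL;
    int counter = 42; /* not a pointer: never registered, never scanned */
    Frame f = { NULL, 2, { &a, &b } };
    frame_push(&f);
    callee();
    frame_pop(&f);
    (void)counter;
    return roots_seen;
}
```

Calling `caller()` reports 3 roots (two from `caller`, one from `callee`) and never looks at `counter`. Because the collector receives the address of each root slot, a moving collector could also update it, something a conservative stack scan cannot safely do.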

I think none of the ideas presented in this paper are suitable for D, because the D GC can already trace the stack (in a non-portable way, but it can), and it can get type information from better places too.

In terms of (time) performance, the benchmarks show it is a little slower than the Boehm (et al.) GC, but the authors argue that Boehm has years of fine-grained optimizations and is tightly coupled to the underlying architecture, while this new approach is still almost unoptimized and completely portable.

The only thing it mentions that could apply to D (and to any conservative GC in general) is the issues that compiler optimizations can introduce, but I'm not aware of any of these issues, so I can't say anything about them.

In case you're wondering, I've added this paper to my papers playground page =)

Update

I think I missed the point of this paper. The current D GC can't possibly trace the stack accurately, because there is no way to get type information from there (I was only thinking about the heap, where some degree of accuracy is achieved by setting the noscan bit for bins that don't contain pointers, as mentioned in my previous post).

So this paper could help bring accurate GC to D, but it doesn't seem like a big deal when you can emit type information about local variables along with the machine code instead of adding the shadow-stack linked list. The only advantage I see is that it should be possible to implement the linked list entirely in the front-end.